12 Apr 2018

Drive the Viewer with ART SMARTTRACK

I was in the Munich office where there is a space dedicated to VR. One of the things they are doing is using ART (Advanced Realtime Tracking) devices to drive VRED when showing a model (from 2:42 in the video):

We thought about looking into using the same devices to drive our Viewer. So I took a SMARTTRACK device and checked what data it's sending that I could use to manipulate the Viewer's camera.

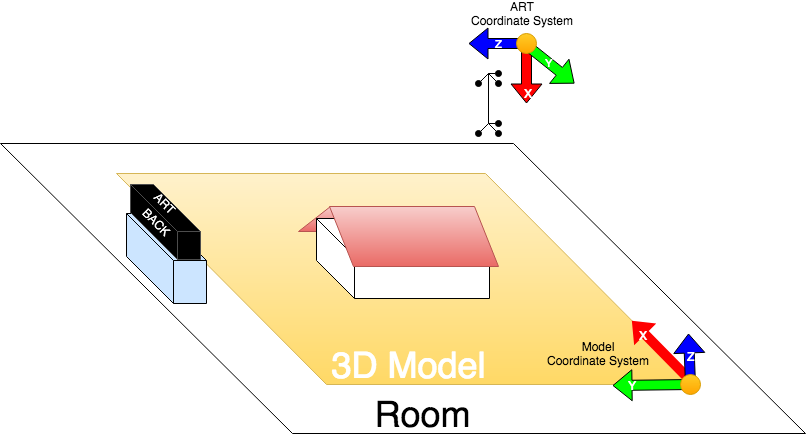

I'm not an expert on this device, so maybe things could have been set up differently, but based on the current settings the coordinate systems were like so:

So the origin of the device's coordinate system was about 1.5 metres away from the device, and half a metre above it and to its left.

Maybe the device could be set up so that its origin and axes coincide with those used by the 3D model. In that case we would not have to translate the values it sends.

Also, in the Viewer the model is usually positioned so that its origin is at the centre of the model, i.e. in the above drawing the Model Coordinate System would be at the centre of the house.
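As a rough illustration of that translation, here is a minimal sketch that maps a tracked position from the ART coordinate system into model coordinates. It assumes the two systems' axes are already aligned, so no rotation or axis swapping is needed. The scale factor is model size divided by the size of the tracked area, which is the same ratio the client code later in this post uses. `artToModel` and its parameters are hypothetical names.

```javascript
// Hypothetical helper: map a point from ART coordinates to model coordinates.
// Assumes the ART and model axes are already aligned, so only a translation
// and a uniform scale are needed.
function artToModel(artPoint, artOrigin, artSize, modelSize, modelCentre) {
  // Convert a distance in ART units into model units
  const toModel = (v) => (v * modelSize) / artSize;
  return {
    // Offset from the chosen ART point, scaled, then placed at the model centre
    x: toModel(artPoint.x - artOrigin.x) + modelCentre.x,
    y: toModel(artPoint.y - artOrigin.y) + modelCentre.y,
    z: toModel(artPoint.z - artOrigin.z) + modelCentre.z,
  };
}

// Example: a 1500-unit tracked area mapped onto a model 30 units across,
// with the ART point (800, 500, 1000) treated as the model centre
const p = artToModel({ x: 1550, y: 500, z: 1000 }, { x: 800, y: 500, z: 1000 },
  1500, 30, { x: 0, y: 0, z: 0 });
console.log(p); // → { x: 15, y: 0, z: 0 }
```

The `positionTransform` matrix in the client code below applies the same offset and scaling. Because the axes are not aligned in this setup, it also swaps and flips them.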

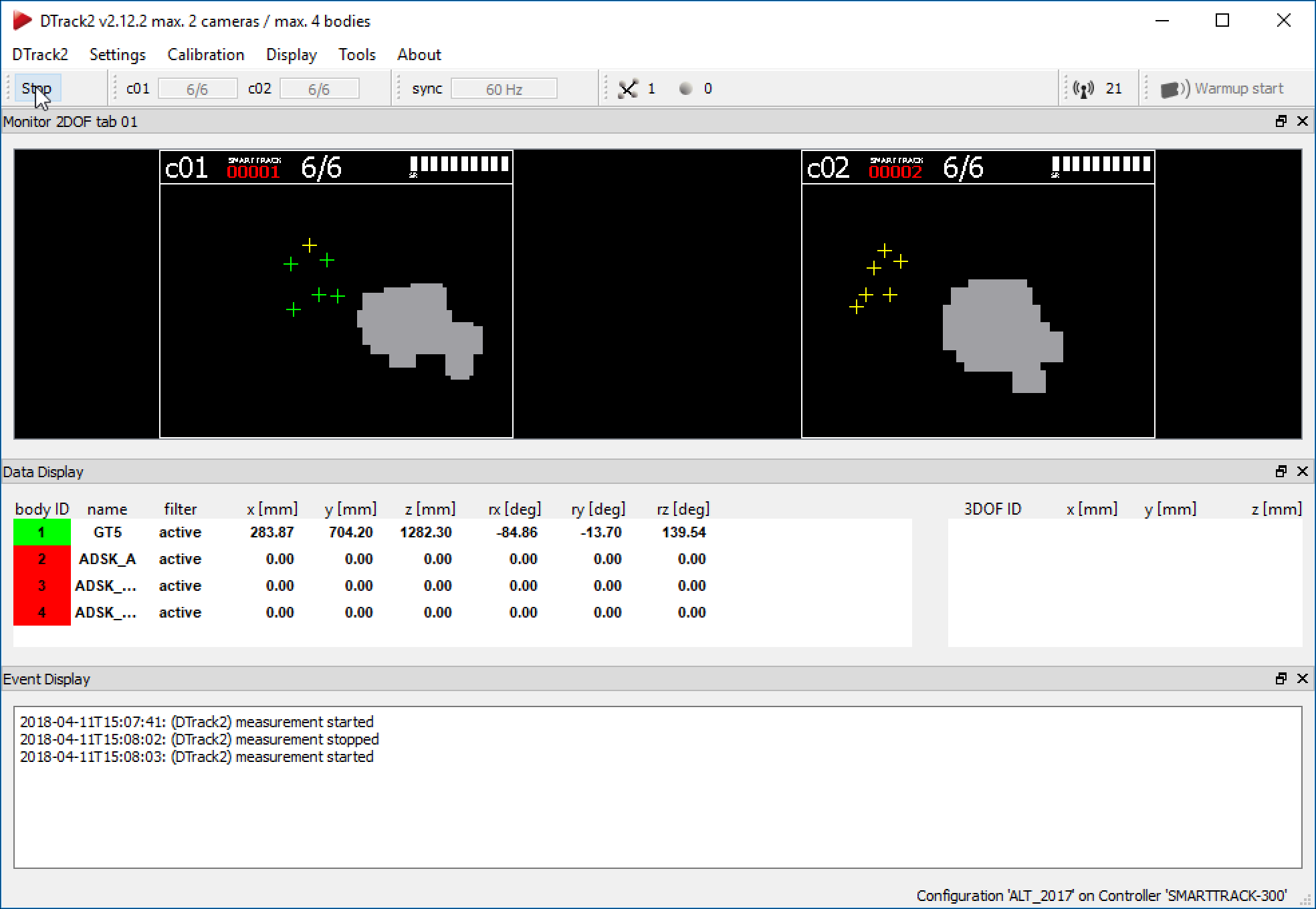

I also added the stick with the 6 spheres to the drawing (under the ART Coordinate System) showing the orientation when it is at 0° rotation around all axes. This is the object that the device is tracking. Here is one of the apps showing how that object is being tracked by the device:

In order to use the device to drive the Viewer's camera I had to do two things:

1) Make my server listen to the UDP messages sent by the device (as far as I can see this currently cannot be done directly in a web client)

2) Pass on the relevant messages to the browser-based client - for this I used socket.io

I started off with the Model Derivative API sample and then added the extra code to it.
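One detail about the data matters for the camera code. Besides a position, each tracked body comes with nine numbers forming a 3×3 rotation matrix. The client code reads them column by column (values 0 to 2 are the first column), so each column is one of the body's local axes expressed in ART coordinates. At 0° rotation around all axes, as for the stick in the drawing, the matrix is the identity. The sketch below derives a camera from such a pose the way `setCamera()` does: it looks along the body's local X axis, two units ahead, with local Z as up. It uses hypothetical names and plain arrays instead of three.js, and it leaves out the axis remapping.

```javascript
// Hypothetical helper: split the nine rotation values into the body's local
// axes, assuming column-wise order (values 0-2 are the first column).
function axesFromRotation(m) {
  return {
    x: [m[0], m[1], m[2]],
    y: [m[3], m[4], m[5]],
    z: [m[6], m[7], m[8]],
  };
}

// Derive a camera from a tracked pose: look along the body's local X axis and
// use its local Z axis as "up". The columns of a rotation matrix are unit
// length, so scaling by lookDistance puts the target that far ahead.
function cameraFromPose(position, rotation, lookDistance = 2) {
  const axes = axesFromRotation(rotation);
  const target = position.map((p, i) => p + axes.x[i] * lookDistance);
  return { eye: position.slice(), target, up: axes.z };
}

// At 0° rotation around all axes the matrix is the identity:
// eye stays at the origin, target is [2, 0, 0] and up is [0, 0, 1]
const identity = [1, 0, 0, 0, 1, 0, 0, 0, 1];
console.log(cameraFromPose([0, 0, 0], identity));
```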

1) Server side

This is all the extra code I had to add to the start.js file that is run by the Node server:

```javascript
// Listening to the ART server's UDP messages
const dgram = require('dgram');
const udp_server = dgram.createSocket('udp4');
var _socket = null;

udp_server.on('error', (err) => {
  console.log(`server error:\n${err.stack}`);
  // Close the UDP socket (not the HTTP server)
  udp_server.close();
});

udp_server.on('message', (msg, rinfo) => {
  console.log(`server got: ${msg} from ${rinfo.address}:${rinfo.port}`);
  if (_socket) {
    var lines = msg.toString().split('\n');
    for (var line of lines) {
      // String is something like:
      // 6di 4 [0 2 0.000][542.824 744.991 1309.946][0.041525 0.929455 0.366592 -0.998838 0.047598 -0.007540 -0.024457 -0.365853 0.930351] ...
      // 6di 4 [id st er][x y z][3x3 transformation matrix]
      // id = identifier
      // st = state: 0 = not tracked
      // er = drift error estimate
      if (line.startsWith('6di')) {
        // No need to pass on info if object is not tracked
        if (!line.startsWith('6di 4 [0 0')) {
          _socket.emit('ART', line);
          return;
        }
      }
    }
  }
});

udp_server.on('listening', () => {
  const address = udp_server.address();
  console.log(`server listening ${address.address}:${address.port}`);
});

udp_server.bind(5000);

// Socket.io communication with the browser client
// (`server` is the HTTP server already set up earlier in start.js)
var io = require('socket.io')(server);

io.on('connection', function (socket) {
  console.log('a user connected (id=' + socket.id + ')');
  _socket = socket;
});
```

2) Client side

This is the extra code I added to the scripts.js file in the www/js folder:

```javascript
/////////////////////////////////////////////////////////////////
// ART SmartTrack
/////////////////////////////////////////////////////////////////

MyVars.startTracking = function (viewer, artSize, artTranslation) {
  if (!MyVars._socket) {
    MyVars._socket = io();
  }

  // We need perspective view in order to go inside buildings, etc.
  viewer.navigation.toPerspective();

  var _modelBox = viewer.model.getBoundingBox();
  var _modelSize = _modelBox.min.distanceTo(_modelBox.max);
  var _scale = _modelSize / artSize;

  function setCamera(viewer, positionValues, matrixValues) {
    // This is used to transform the camera positions so that XY is the horizontal plane and
    // Z is the up vector
    // In case of camera:
    // Z is distance from camera >> Viewer X
    // X is up and down >> Viewer Z
    // Y is left and right >> Viewer Y
    var positionTransform = new THREE.Matrix4();
    positionTransform.set(
      0, -_scale, 0, artTranslation.y * _scale,
      0, 0, _scale, -artTranslation.z * _scale,
      -_scale, 0, 0, artTranslation.x * _scale,
      0, 0, 0, 1);

    // The 9 rotation values arrive column by column
    var camera = new THREE.Matrix4();
    camera.set(
      matrixValues[0], matrixValues[3], matrixValues[6], 0,
      matrixValues[1], matrixValues[4], matrixValues[7], 0,
      matrixValues[2], matrixValues[5], matrixValues[8], 0,
      0, 0, 0, 1);

    var position = new THREE.Matrix4();
    position.set(
      1, 0, 0, positionValues[0],
      0, 1, 0, positionValues[1],
      0, 0, 1, positionValues[2],
      0, 0, 0, 1);

    // Matrix4.multiply() modifies the matrix it is called on, so use
    // multiplyMatrices() to leave positionTransform unchanged
    position = new THREE.Matrix4().multiplyMatrices(positionTransform, position);
    camera = new THREE.Matrix4().multiplyMatrices(positionTransform, camera);

    // Main camera vectors
    var x = new THREE.Vector3(), y = new THREE.Vector3(), z = new THREE.Vector3();
    camera.extractBasis(x, y, z);

    // Eye / Position
    var eye = new THREE.Vector3();
    eye.setFromMatrixPosition(position);

    // Target / Center
    var target = new THREE.Vector3().copy(eye);
    target.add(x.setLength(2));

    // UpVector
    var up = z.setLength(1);

    // Set values
    viewer.navigation.setTarget(target);
    viewer.navigation.setPosition(eye);
    viewer.navigation.setCameraUpVector(up);
  }

  MyVars._socket.on('ART', function (msg) {
    // Will be something like:
    // 6di 4 [0 0 0.000][0.000 0.000 0.000][0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000]
    // [1 0 0.000][0.000 0.000 0.000][0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000]
    // [2 0 0.000][0.000 0.000 0.000][0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000]
    // [3 0 0.000][0.000 0.000 0.000][0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000]
    //console.log(msg);
    if (MyVars.viewer.navigation) {
      var arrays = msg.split("[");
      var positionStrings = arrays[2].replace("]", "").split(" ");
      var matrixStrings = arrays[3].replace("] ", "").split(" ");
      var positionValues = positionStrings.map(v => parseFloat(v));
      var matrixValues = matrixStrings.map(v => parseFloat(v));
      setCamera(MyVars.viewer, positionValues, matrixValues);
    }
  });
};
```

Then I just had to call this function once the geometry has actually loaded, since there is not much point listening to those events before that. So I created an event listener for the Viewer and called the `startTracking()` function from there:

```javascript
viewer.addEventListener(
  Autodesk.Viewing.GEOMETRY_LOADED_EVENT,
  function (event) {
    MyVars.startTracking(viewer, 1500, {x: 800, y: 500, z: 1000});
  }
);
```

Here is the sample in action:

The finished PoC (Proof of Concept) is in the ART branch of the GitHub repo: https://github.com/Autodesk-Forge/model.derivative-nodejs-sample/tree/ART
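The client finds the values by splitting the message on `[`, so it only ever reads the first body on the line. If several bodies need to be tracked, a parser along these lines could read every record instead. It is a hypothetical sketch, not code from the repo. It assumes the `[id st er][x y z][3x3 matrix]` layout described in the server comments and keeps only bodies whose state is non-zero (tracked).

```javascript
// Hypothetical parser for one "6di" line, assuming the
// "[id st er][x y z][9 rotation values]" layout from the server comments.
function parse6di(line) {
  if (!line.startsWith('6di')) return [];
  // Each body record is three bracketed groups written back to back
  const bodyPattern = /\[([^\]]*)\]\[([^\]]*)\]\[([^\]]*)\]/g;
  const toNumbers = (s) => s.trim().split(/\s+/).map(Number);
  const bodies = [];
  for (const match of line.matchAll(bodyPattern)) {
    const [id, state, error] = toNumbers(match[1]);
    bodies.push({
      id,
      state,
      error,
      position: toNumbers(match[2]), // x y z
      rotation: toNumbers(match[3]), // 3x3 matrix, 9 values
    });
  }
  return bodies;
}

// Keep only bodies the tracker reports as tracked (state 0 = not tracked)
function trackedBodies(line) {
  return parse6di(line).filter((body) => body.state !== 0);
}

const sample = '6di 2 [0 2 0.000][542.824 744.991 1309.946]' +
  '[0.041525 0.929455 0.366592 -0.998838 0.047598 -0.007540 -0.024457 -0.365853 0.930351] ' +
  '[1 0 0.000][0.000 0.000 0.000][0 0 0 0 0 0 0 0 0]';
console.log(trackedBodies(sample).map((b) => b.id)); // → [ 0 ]
```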