7 Jun 2017

Nailing large file uploads with the Forge resumable API

This post focuses on a precise and crucial task your web application may need at some point: gracefully handling large file uploads.

To achieve this, the Forge Data Management API provides a specific endpoint:

PUT buckets/:bucketKey/objects/:objectName/resumable

You can find some examples of its use on that documentation page; however, writing the complete chunking and upload logic based on that information alone is a bit tricky... Look no further, I dug it all up for you ;)
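To make the endpoint a bit more concrete, here is a minimal sketch of what the request for a single chunk might look like at the HTTP level. The helper name `buildChunkRequest` is hypothetical, and the base URL and header names are my assumptions, derived from the parameters the SDK call takes (content length, content range, session ID), so check them against the documentation page before relying on them:

```javascript
// Hypothetical helper (not part of the Forge SDK): describes the PUT request
// for one chunk. Base URL and header names are assumptions, see the text above.
const BASE_URL = 'https://developer.api.autodesk.com/oss/v2'

function buildChunkRequest ({
  bucketKey, objectName, sessionId, start, end, totalSize, token
}) {
  return {
    method: 'PUT',
    url: `${BASE_URL}/buckets/${encodeURIComponent(bucketKey)}` +
      `/objects/${encodeURIComponent(objectName)}/resumable`,
    headers: {
      Authorization: `Bearer ${token}`,
      // inclusive byte range of this chunk within the whole file
      'Content-Range': `bytes ${start}-${end}/${totalSize}`,
      'Content-Length': String(end - start + 1),
      // must be the same value for every chunk of a given upload
      'Session-Id': sessionId
    }
  }
}

// First 5 MB chunk of a 17401815-byte file
const request = buildChunkRequest({
  bucketKey: 'demo-bucket-us',
  objectName: 'House.dwfx',
  sessionId: 'my-session-id',
  start: 0,
  end: 5 * 1024 * 1024 - 1,
  totalSize: 17401815,
  token: '<access token>'
})

console.log(request.url)
console.log(request.headers['Content-Range']) // bytes 0-5242879/17401815
```

The sketch only builds a description of the request; sending it, and reading the chunk bytes from disk, is left to whatever HTTP client you prefer.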

I wanted to write a robust piece of code that is as easy as possible to reuse in another project, handles files of any size and respects the recommended practices. To top it off, I also wanted to convey the upload progress back to the caller: nothing is more frustrating than waiting for an upload to complete without knowing how far along it is.

The following server-side code uses Node.js and the official Forge SDK npm package; however, it should be relatively straightforward to adapt it to any other server-side programming language. First of all, for security reasons it is highly recommended to perform all your Forge API calls from the server, so you do not need to share a token with the client application. For this reason, uploading a file to Forge from a web page is a two-step process: first upload the file from your client to your server, then, once that upload is complete, upload it from your server to Forge. I only focus on the second part here; uploading from a web page to your server really depends on the tech and libraries you are using. I personally go with the combo Dropzone + Multer.

Let's take a look at the Node.js code of my OSS Service, the component that handles all REST API calls (through the SDK) to the Data Management API. This code creates an array of tasks, each one responsible for uploading one chunk of the file. It then uses the async/eachLimit function to run a limited number of upload tasks concurrently:

    /////////////////////////////////////////////////////////
    // Uploads object to bucket using resumable endpoint
    //
    // Assumes the enclosing module imports fs and
    // eachLimit (from 'async/eachLimit')
    //
    /////////////////////////////////////////////////////////
    uploadObjectChunked (getToken, bucketKey, objectKey,
      file, opts = {}) {

      return new Promise((resolve, reject) => {

        const chunkSize = opts.chunkSize || 5 * 1024 * 1024

        const nbChunks = Math.ceil(file.size / chunkSize)

        const chunksMap = Array.from({
          length: nbChunks
        }, (e, i) => i)

        // generates a unique session ID
        const sessionId = this.guid()

        // prepare the upload tasks
        const uploadTasks = chunksMap.map((chunkIdx) => {

          const start = chunkIdx * chunkSize

          const end = Math.min(
            file.size, (chunkIdx + 1) * chunkSize) - 1

          const range = `bytes ${start}-${end}/${file.size}`

          const length = end - start + 1

          const run = async () => {

            const token = await getToken()

            // the stream is created only when the task runs, so
            // file descriptors are not opened for all chunks up front
            const readStream =
              fs.createReadStream(file.path, {
                start, end
              })

            return this._objectsAPI.uploadChunk(
              bucketKey, objectKey,
              length, range, sessionId,
              readStream, {},
              {autoRefresh: false}, token)
          }

          return {
            chunkIndex: chunkIdx,
            run
          }
        })

        let progress = 0

        // runs the upload tasks asynchronously in parallel,
        // the number of simultaneous uploads is defined by
        // opts.concurrentUploads
        eachLimit(uploadTasks, opts.concurrentUploads || 3,
          (task, callback) => {

            task.run().then((res) => {

              if (opts.onProgress) {

                progress += 100.0 / nbChunks

                opts.onProgress ({
                  progress: Math.round(progress * 100) / 100,
                  chunkIndex: task.chunkIndex
                })
              }

              callback ()

            }, (err) => {

              console.log('error')
              console.log(err)

              callback(err)
            })

          }, (err) => {

            if (err) {
              return reject(err)
            }

            return resolve({
              fileSize: file.size,
              bucketKey,
              objectKey,
              nbChunks
            })
          })
      })
    }

Note that if the opts.onProgress callback is provided, the method uses it to notify the caller of the upload progress. A word of caution: the recommended chunk size is 5 MB and the minimum chunk size is 2 MB. You cannot chunk a file smaller than 2 MB, otherwise you will receive a mysterious 416 (Requested Range Not Satisfiable) error.
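The range arithmetic and the size caveat can be isolated in a small pure function, which makes them easy to check by hand. This is a sketch, not the code from my service: `computeChunkRanges` and `MIN_CHUNK_SIZE` are hypothetical names, and returning `null` for files under 2 MB is just one possible way to signal that such a file should presumably go through a regular, non-chunked upload instead:

```javascript
const MB = 1024 * 1024
const MIN_CHUNK_SIZE = 2 * MB     // minimum chunk size mentioned in the text
const DEFAULT_CHUNK_SIZE = 5 * MB // recommended chunk size mentioned in the text

// Returns the inclusive byte range of every chunk, or null when the file is
// too small to be chunked at all.
function computeChunkRanges (fileSize, chunkSize = DEFAULT_CHUNK_SIZE) {
  if (chunkSize < MIN_CHUNK_SIZE) {
    throw new RangeError('chunkSize must be at least 2 MB')
  }
  if (fileSize < MIN_CHUNK_SIZE) {
    return null
  }
  const nbChunks = Math.ceil(fileSize / chunkSize)
  return Array.from({ length: nbChunks }, (_, i) => {
    const start = i * chunkSize
    // the last chunk stops at the end of the file
    const end = Math.min(fileSize, (i + 1) * chunkSize) - 1
    return {
      start,
      end,
      length: end - start + 1,
      range: `bytes ${start}-${end}/${fileSize}`
    }
  })
}

// The 17401815-byte file used for the console output at the end of this post
const ranges = computeChunkRanges(17401815)
ranges.forEach((r) => console.log(r.range))
// bytes 0-5242879/17401815
// bytes 5242880-10485759/17401815
// bytes 10485760-15728639/17401815
// bytes 15728640-17401814/17401815
```

Only the last range is shorter than the chunk size, which matches the four chunks reported for that file.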

The snippet below shows how I invoke that service method from my endpoint. To notify the caller of that endpoint (the web client) of the progress, I use a socket. The socketId is passed as a body parameter of the request because it is optional:

    /////////////////////////////////////////////////////////
    // upload resource
    //
    /////////////////////////////////////////////////////////
    router.post('/buckets/:bucketKey',
      uploadSvc.uploader.any(),
      async (req, res) => {

        try {

          const file = req.files[0]

          const forgeSvc = ServiceManager.getService('ForgeSvc')

          const ossSvc = ServiceManager.getService('OssSvc')

          const bucketKey = req.params.bucketKey

          const objectKey = file.originalname

          const opts = {
            chunkSize: 5 * 1024 * 1024, // 5MB chunks
            concurrentUploads: 3,
            onProgress: (info) => {

              const socketId = req.body.socketId

              if (socketId) {

                const socketSvc = ServiceManager.getService(
                  'SocketSvc')

                const msg = Object.assign({}, info, {
                  bucketKey,
                  objectKey
                })

                socketSvc.broadcast (
                  'progress', msg, socketId)
              }
            }
          }

          const response =
            await ossSvc.uploadObjectChunked (
              () => forgeSvc.get2LeggedToken(),
              bucketKey,
              objectKey,
              file, opts)

          res.json(response)

        } catch (error) {

          console.log(error)

          res.status(error.statusCode || 500)
          res.json(error)
        }
      })

This endpoint is called by Dropzone on my client, and the file upload is handled automatically by Multer. Just painless! Dropzone also provides your client with a progress callback that you can use to give the end user some visual indication. How you show that progress is up to you. Remember that the file is uploaded twice, but you don't necessarily need to show that to the user: each upload could count as 50% of the complete job.
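As an illustration of the 50/50 idea, here is a minimal sketch of how the two progress values could be merged into a single figure on the client. The function name `overallProgress` is hypothetical and not part of my project; it simply assumes both phases report a percentage between 0 and 100:

```javascript
// Hypothetical helper: maps the two upload phases onto a single 0-100 scale,
// each phase counting for half of the job.
function overallProgress (clientToServer, serverToForge) {
  // guard against out-of-range values coming from either source
  const clamp = (p) => Math.min(100, Math.max(0, p))
  const combined = (clamp(clientToServer) + clamp(serverToForge)) / 2
  // keep two decimals, like the server-side progress value
  return Math.round(combined * 100) / 100
}

console.log(overallProgress(50, 0))    // 25
console.log(overallProgress(100, 0))   // 50
console.log(overallProgress(100, 25))  // 62.5
console.log(overallProgress(100, 100)) // 100
```

You would call it from both the Dropzone progress callback and the socket 'progress' handler, keeping the latest value of each phase around.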

Et voilà! You can find the complete source code of my project here:

forge-boilers.nodejs/5 - viewer+server+oss+derivatives

And the live demo at https://oss.autodesk.io

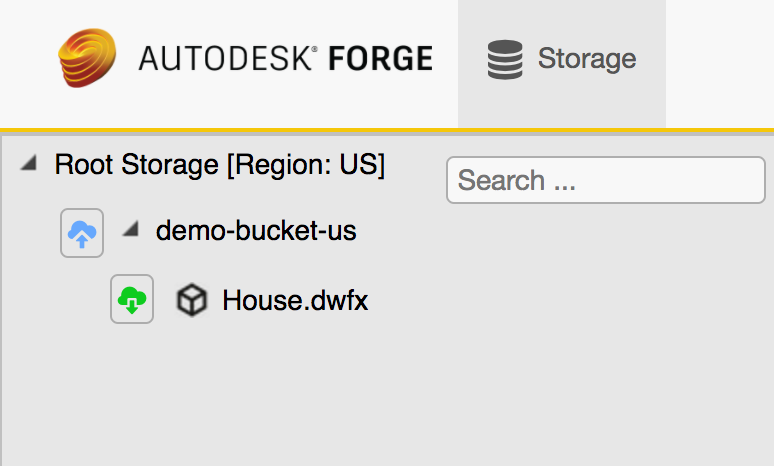

Below is the browser console output when uploading a 17.4 MB file. Notice that the chunks do not necessarily complete in order, but that doesn't matter: as long as each chunk is uploaded successfully, the file will be reassembled on the Forge server:

    Initialize upload client -> server:
    File {upload: Object, status: "added",name: "House.dwfx"…}
    upload client -> server:
    progress: 16.38
    upload client -> server:
    progress: 20.99
    upload client -> server:
    progress: 25.70
    //more of those ...
    upload client -> server:
    progress: 100
    upload server -> forge:
    Object {progress: 25, chunkIndex: 2, bucketKey: "demo-bucket-us", objectKey: "House.dwfx"}
    upload server -> forge:
    Object {progress: 50, chunkIndex: 0, bucketKey: "demo-bucket-us", objectKey: "House.dwfx"}
    upload server -> forge:
    Object {progress: 75, chunkIndex: 3, bucketKey: "demo-bucket-us", objectKey: "House.dwfx"}
    upload server -> forge:
    Object {progress: 100, chunkIndex: 1, bucketKey: "demo-bucket-us", objectKey: "House.dwfx"}
    upload complete:
    Object {fileSize: 17401815, bucketKey: "demo-bucket-us", objectKey: "House.dwfx", nbChunks: 4}
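The out-of-order completion is a direct consequence of running several uploads at once. If you want to play with that behaviour without touching Forge, here is a minimal sketch of a promise-based stand-in for async/eachLimit. The `runLimited` function and the fake timer-based "uploads" are hypothetical and only there for illustration; the real service uses eachLimit from the async package:

```javascript
// Minimal stand-in for async/eachLimit built on promises: runs at most
// `limit` tasks at a time and resolves once all of them are done.
// Simplification: after a failure no new task is started.
function runLimited (tasks, limit, onDone = () => {}) {
  return new Promise((resolve, reject) => {
    let next = 0
    let active = 0
    let finished = 0
    let failed = false

    if (tasks.length === 0) return resolve()

    const launch = () => {
      while (!failed && active < limit && next < tasks.length) {
        const index = next++
        active++
        tasks[index]().then(() => {
          active--
          finished++
          onDone(index, finished / tasks.length * 100)
          if (finished === tasks.length) resolve()
          else launch()
        }, (err) => {
          failed = true
          reject(err)
        })
      }
    }

    launch()
  })
}

// Fake "uploads" with different durations, so they finish out of order
const delays = [60, 20, 40, 10]
const tasks = delays.map((ms) => () =>
  new Promise((resolve) => setTimeout(resolve, ms)))

const completed = []

const done = runLimited(tasks, 3, (chunkIndex, progress) => {
  completed.push(chunkIndex)
  console.log({ progress, chunkIndex })
})
```

With these delays chunk 1 normally finishes first and chunk 0 last, while the progress still goes 25, 50, 75, 100, just like in the output above.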